From Zero to Hero: The Policy Tests Journey!

What are tests?

Test, test, test... and test...

Our manager keeps asking the same question all the time - did we test our feature enough? Did we cover all the corner cases? Is there any regression? Are you confident about your changes? bla bla bla... 🙂

But what does testing mean?

An application is expected to perform specific actions for a given input, and the output of those actions needs to be verified against what we expect. There are two ways to do this verification:

Either we give some input to the application and wait for it to produce some output, which we then manually verify against desired output.

Or, we can write some code that does the output verification for us automatically.

Either way, this process is called testing. Manual testing takes human effort and is relatively slow, and there is always a chance of forgetting some critical tests.

At Fyle, like many early-stage startups, we never got to writing tests. As a result, we didn’t even have a single test until this initiative. Finally, my colleague Irfan Alam and I changed this with automated tests. Irfan is our superhero who worked with me to achieve this milestone.

In this blog, we share the story of how we added test support to a 5-year-old microservice with 4K+ lines of code and 600+ commits. We also cover the tools we used, the problems we faced during development, how we solved them, and the results we got after this mammoth initiative. So, let's get started!

Testing is crucial in any application to ensure that it behaves as expected under the specified conditions. When we add new features or fix bugs in an application under development, we don't want the existing functionality to be affected. We should not break other things in an attempt to fix or build the current thing.

With tests, we know that our application will keep on behaving the way it is supposed to. There are many types of tests, including unit tests, feature tests, integration tests, API tests, performance tests, etc. In our case, we are going to talk about API-level testing.

Our application, Fyle, is expense management software that helps businesses track their expenditures and enables their employees to get seamless reimbursements for spending on behalf of the company. Policy is an essential feature of Fyle since the admin of an organization depends on it to enforce the org's policies on expenses made by its employees. If not enforced properly, it can put the entire business at risk.

Problems

"We have completed the new feature on time!" Irfan cheered.

"Can we leave it on staging for a week or two, just in case" - we asked nervously.

Before policy tests, there was always this feeling of uncertainty - fear of breaking something. So we would test every change rigorously with the help of other devs and leave it on testing environments because we feared regressions. As a result, testing took longer than the code changes themselves, which delayed policy releases and bug fixes.

We had to test a never-ending and very diverse list of policies. It was challenging to test them one by one before each release. Also, we would just have to fall back on our luck (sometimes our team lead would vow to God) after manual testing.

We had to come up with some way of dealing with this never-ending misery and feeling of fear because, in this case, "beyond our fears lay the uncertainty of breaking code."

We decided to fix this problem forever with automated tests. But this was not easy because policy, as expected, depended on a range of other services to work. There was also the question of what needed to be covered by the tests, and how.

What did we do?

Organizing testcases

This is how we organized our tests. We created different “testcases” files covering the different kinds of policies possible.

What are these Testcases files?

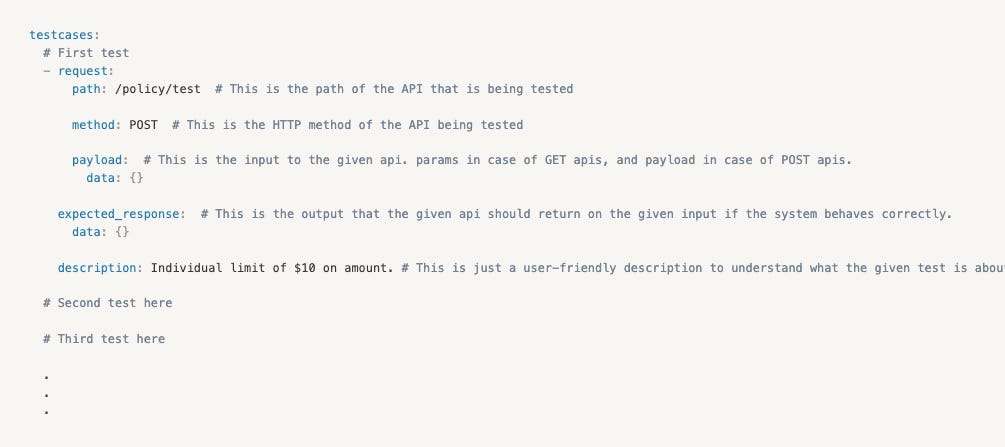

Each testcases file is a YAML file designed to test a certain kind of policy behavior. It may contain one or more tests. This is what a testcases file looks like:

So a single test (or request) would contain a method which is a type of request (GET or POST), path (API path which the client needs to hit), data (Input), expected output, and description. We would enclose this test case under test cases in the YAML file, which can contain multiple test cases.
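To make the shape of a single test concrete, here is a minimal sketch of what one parsed testcases file might look like as a Python dict, plus a small check that each test carries the fields listed above. The key names (`test_cases`, `expected_output`, and so on), the path, and the values are all assumptions made for illustration, not our real schema.

```python
# Hypothetical result of parsing one testcases YAML file.
# Every key name and value here is an illustrative assumption.
testcases_file = {
    "test_cases": [
        {
            "description": "expense above the limit is flagged",
            "method": "POST",
            "path": "/policy/check",
            "data": {"amount": 1200, "currency": "USD"},
            "expected_output": {"flagged": True},
        },
    ]
}

REQUIRED_KEYS = {"description", "method", "path", "data", "expected_output"}
ALLOWED_METHODS = {"GET", "POST"}


def validate_case(case):
    """Return a list of problems found in one test case (empty if none)."""
    problems = [f"missing key: {key}" for key in sorted(REQUIRED_KEYS - case.keys())]
    if case.get("method") not in ALLOWED_METHODS:
        problems.append(f"unsupported method: {case.get('method')!r}")
    return problems


# Every test in the file should pass the structural check.
for case in testcases_file["test_cases"]:
    assert validate_case(case) == []
```

A check like this is cheap to run before any request is sent, so a typo in a testcases file fails fast instead of surfacing as a confusing policy failure.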

Selecting tests to run

We used Python’s pytest framework to write tests.

After many discussions, the best way we found to execute tests was to have a single test function run all the tests, and to use pytest’s @pytest.mark.parametrize decorator to supply the different sets of parameters to this single function.

We used Flask’s test client to simulate the HTTP request described by the test currently running from the YAML file.
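The single-function idea can be sketched roughly as below. `run_case` is a hypothetical helper, and the test case keys (`method`, `path`, `data`, `expected_output`, `description`) are assumed names. The only thing it expects from `client` is the `open(path, method=..., json=..., query_string=...)` call and the `get_json()` response method that Flask's test client offers; a tiny stub stands in for `app.test_client()` so the sketch runs without Flask installed.

```python
def run_case(client, case):
    """Send the request described by one test case and compare the JSON reply."""
    kwargs = {"method": case["method"]}
    if case["method"] == "POST":
        kwargs["json"] = case["data"]          # input goes in the request body
    else:
        kwargs["query_string"] = case["data"]  # input goes in the URL for GET
    response = client.open(case["path"], **kwargs)
    assert response.get_json() == case["expected_output"], case["description"]


class StubResponse:
    """Mimics the one response method the runner uses."""

    def __init__(self, payload):
        self._payload = payload

    def get_json(self):
        return self._payload


class StubClient:
    """Stand-in for app.test_client(); always answers with a fixed payload."""

    def __init__(self, payload):
        self.payload = payload
        self.calls = []

    def open(self, path, **kwargs):
        self.calls.append((path, kwargs))
        return StubResponse(self.payload)


# Hypothetical test case, shaped like one entry of a testcases file.
example_case = {
    "description": "expense above the limit is flagged",
    "method": "POST",
    "path": "/policy/check",
    "data": {"amount": 1200},
    "expected_output": {"flagged": True},
}
run_case(StubClient({"flagged": True}), example_case)
```

In a real suite the parametrized test function would receive one test case per run and pass it, together with the Flask test client, to a runner like this one.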

This setup solved all of our problems, but one - the collection of tests. Policies in our system can be of two types:

form policies: policies created in a user-friendly form

JSON policies: policies written directly in JSON

All our testcases files were stored in one of two directories - the first directory contained testcases for form policies, and the second contained testcases for JSON policies.

Now, based on the changes we made, we would want to run testcases from just one of these directories. But with a fixed list passed to @pytest.mark.parametrize, every testcase found under the root directory would run.

The pytest package provides many useful hooks that can be defined in conftest.py. We decided to use pytest_addoption and pytest_generate_tests to solve our problem. pytest calls these hooks before running the tests.

We define an optional custom flag, --policytype, using pytest_addoption. Inside pytest_generate_tests, we read the flag's value and call a helper function that returns only the tests in the directory the flag names. By default, when the flag is not passed, the tests from both directories run.
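A conftest.py along these lines might look like the sketch below. `pytest_addoption`, `pytest_generate_tests`, `parser.addoption`, `config.getoption`, `metafunc.fixturenames`, and `metafunc.parametrize` are real pytest names; the directory layout (`testcases/form`, `testcases/json`), the helper `collect_testcase_files`, and the `testcase_file` argument name are assumptions for illustration. For simplicity this version parametrizes over files rather than over individual tests inside each file.

```python
from pathlib import Path

TESTCASES_ROOT = Path("testcases")   # hypothetical root directory
POLICY_TYPES = ("form", "json")      # hypothetical sub-directory names


def collect_testcase_files(root, policytype=None):
    """Return testcases files for one policy type, or for all types if None."""
    selected = [policytype] if policytype else POLICY_TYPES
    files = []
    for name in selected:
        files.extend(sorted((Path(root) / name).glob("*.yaml")))
    return files


def pytest_addoption(parser):
    # Registers the optional flag: pytest --policytype form
    parser.addoption(
        "--policytype",
        action="store",
        default=None,
        choices=POLICY_TYPES,
        help="run only the testcases for this kind of policy",
    )


def pytest_generate_tests(metafunc):
    # Only parametrize test functions that ask for a `testcase_file` argument.
    if "testcase_file" in metafunc.fixturenames:
        policytype = metafunc.config.getoption("--policytype")
        files = collect_testcase_files(TESTCASES_ROOT, policytype)
        metafunc.parametrize("testcase_file", files, ids=[str(f) for f in files])
```

With this in place, `pytest --policytype json` would generate tests only from the JSON directory, while a plain `pytest` run would generate them from both.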

Coverage

Code coverage removes the blind spots in code by bringing a metric to the tests. It quantifies our tests and shows how much of the codebase is exercised by our testcases. By maintaining good coverage, we can be more confident that the code going into production has actually been run by tests.

Writing tests is one thing and knowing if the tests cover all the crucial parts of code is another. So while writing tests, we had to know that they cover a significant part of the codebase.

We used the pytest-cov plugin, along with pytest, to report test coverage. It can also generate a beautiful HTML report that highlights which parts of the code were and were not executed while the tests ran.
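As a rough illustration, a coverage run can be launched from Python by building the pytest command with pytest-cov's `--cov` and `--cov-report` options. The helper name `coverage_command`, the package name `policy`, and the report directory are assumptions for this sketch.

```python
import sys


def coverage_command(package, report_dir="htmlcov"):
    """Build a pytest command that measures coverage for `package`."""
    return [
        sys.executable, "-m", "pytest",
        f"--cov={package}",                  # measure this package
        "--cov-report=term-missing",         # list uncovered lines in the terminal
        f"--cov-report=html:{report_dir}",   # write the HTML report here
    ]


# `policy` is a hypothetical package name.
command = coverage_command("policy")
```

Passing the list to `subprocess.run(command, check=True)` would run the suite; opening `htmlcov/index.html` afterwards shows the highlighted report.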

The challenges we faced during test development

We started by covering the functions used in the most common policies, and coverage grew significantly during the first couple of days. As we went further, we had to spend a lot of time to cover even another 1-2% of the code. This happened because each new policy test mostly exercised functions we had already covered, so the number of newly covered lines kept shrinking with each test.
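The shrinking gain is easy to see with a toy calculation. The sketch below tracks which line numbers each test executes and reports how many previously uncovered lines it adds. The test names and line numbers are made up purely to illustrate the effect; they are not measurements from our codebase.

```python
def marginal_coverage(lines_per_test, total_lines):
    """Return the coverage gain (in %) of each test, and the overall coverage."""
    covered = set()
    gains = []
    for name, lines in lines_per_test:
        new_lines = set(lines) - covered   # lines no earlier test has touched
        covered |= new_lines
        gains.append((name, 100 * len(new_lines) / total_lines))
    return gains, 100 * len(covered) / total_lines


# Made-up example: a 20-line codebase and three tests with overlapping lines.
example_tests = [
    ("common policy", range(0, 10)),
    ("second policy", range(5, 13)),
    ("rare policy", range(8, 14)),
]
gains, total = marginal_coverage(example_tests, total_lines=20)
# gains == [("common policy", 50.0), ("second policy", 15.0), ("rare policy", 5.0)]
# total == 70.0
```

Each test runs a similar number of lines, but because most of them overlap with earlier tests, the third test adds only a tenth of what the first one did.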

Also, writing tests was not always straightforward - many a time, something would break in between. At first, we would check the terminal output to see where a test was breaking. Soon, there was so much happening in the terminal that we could no longer debug the breaking tests this way. So we started redirecting the terminal output to a log file and searching that file for the breaking tests.
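Searching the log can itself be scripted. The sketch below assumes the output was saved with something like `pytest > run.log 2>&1` and relies on the `FAILED <test id>` and `ERROR <test id>` lines that pytest prints in its short test summary. The helper name and the sample log text are made up for illustration.

```python
import re

# Summary lines look like: "FAILED path/to/test.py::test_name - reason"
SUMMARY_LINE = re.compile(r"^(?:FAILED|ERROR) (\S+)", re.MULTILINE)


def failing_tests(log_text):
    """Return the ids of tests reported as FAILED or ERROR in the log."""
    return SUMMARY_LINE.findall(log_text)


# Made-up excerpt of a redirected test run.
sample_log = """\
some service log line
FAILED tests/test_policy.py::test_policy[form/limits.yaml] - AssertionError
another noisy line mentioning FAILED in the middle
ERROR tests/test_policy.py::test_policy[json/rules.yaml] - ConnectionError
"""
broken = failing_tests(sample_log)
```

Because the pattern is anchored to the start of a line, noisy service logs that merely mention the word FAILED are skipped.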

Covering the last 20% of the code was a real struggle. We had to put our thinking caps on to win those red-marked lines. While we were working on that last 20%, our remote services started rejecting requests because the tests were sending too many of them.

We tried to scale up the services, but requests were still being rejected. As a temporary fix, we added a sleep between tests. Finally, once we were done writing tests, we arranged for local services to be brought up before the policy tests started sending requests. We did this with the pytest_configure hook in our beloved conftest.py file, using it to call a shell script that brings up the other services.
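Both workarounds fit in conftest.py. The sketch below uses two real pytest hooks, `pytest_configure` and `pytest_runtest_teardown`; the script path, the pause length, and the `start_services` helper are assumptions for illustration. The pause was only ever a stopgap, so a real conftest.py would likely keep just one of the two.

```python
import subprocess
import time

SERVICES_SCRIPT = "scripts/start_services.sh"  # hypothetical script path
PAUSE_SECONDS = 0.5                            # hypothetical delay between tests


def start_services(script=SERVICES_SCRIPT, run=subprocess.run):
    """Run the shell script that brings up dependent services; fail loudly on error."""
    run(["sh", script], check=True)


def pytest_configure(config):
    # Called once by pytest after command-line parsing, before tests are collected.
    start_services()


def pytest_runtest_teardown(item, nextitem):
    # Temporary workaround: pause between tests so services are not flooded.
    # `nextitem` is None after the last test, so no pause is needed there.
    if nextitem is not None:
        time.sleep(PAUSE_SECONDS)
```

Using `check=True` means a failing startup script stops the run immediately, rather than letting every test fail later with connection errors.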

Results

Finally, the moment arrived when we ran the tests, and they showed 96% code coverage. That moment was iconic and one of a kind! We virtually hugged each other, and our manager was crying (happy tears, obviously).

We started out just writing tests. But while writing them, we ended up cleaning out redundant and unused code. We also fixed many bugs that we had not been able to reproduce until then. As a result, the policy code went from the most blamed code (for bugs) to some of the cleanest code (pre-Diwali cleaning) in the Fyle codebase.

We are now more confident than ever while shipping releases and bug fixes. Also, our manager is finally happy and finds time to play with his cat.