Speeding up Docker build in CI/CD

“Efficiency is doing things right; effectiveness is doing the right things.” – Peter Drucker

Hello, I’m Satyam Jadhav, and I work as a Senior Member of Technical Staff at Fyle.

I recently worked on an initiative to reduce our CI/CD pipeline’s execution time, especially the unit test workflow, which runs on every pull request to our Platform API service, a Flask application. The workflow ran 2000+ unit tests and took 6 minutes and 30 seconds to complete. The goal was to cut that by 40 to 50%.

We use GitHub Actions for our CI pipeline and Docker for containerisation. Initially, we had the workflow below, which ran our unit tests in 4 parallel sets using the matrix strategy.

Execution time analysis

I began by analyzing where each second of the total runtime of 6 minutes and 30 seconds was being spent (all percentages below are of that total runtime).

- Test set: 5 minutes 30 seconds (85%)
  - Docker build: 2 minutes (31%)
  - Running tests and coverage export: 3 minutes 30 seconds (54%)
- Combine coverage: 30 seconds (8%)
- GitHub runners overhead: 30 seconds (7%)

The Docker build step was common to all sets and took 31% of the total execution time. Because every one of the 4 sets runs it, any reduction in build time is saved four times over in billable time, which would let us increase parallelization without additional cost. So it made sense to focus on reducing the time taken to build the Docker image.
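To make the 4x multiplier concrete, here is a minimal sketch of a matrix layout in which every set builds the image on its own. It is illustrative, not the actual workflow: the set names, the `tests/set_*` paths and the `platform-api` service name are hypothetical; only the `matrix.set_name` key mirrors the workflow shown later in this post.

```yaml
# Hypothetical sketch: each matrix job repeats the Docker build,
# so the build time is billed once per set (4 times here).
jobs:
  pytest:
    name: Running Pytest / ${{ matrix.set_name }}
    runs-on: ubuntu-latest
    strategy:
      fail-fast: false
      matrix:
        include:
          # Illustrative split of the test suite into 4 sets
          - set_name: set-1
            test_path: tests/set_1
          - set_name: set-2
            test_path: tests/set_2
          - set_name: set-3
            test_path: tests/set_3
          - set_name: set-4
            test_path: tests/set_4
    steps:
      - uses: actions/checkout@v4
      - name: Build service
        # Runs in all 4 jobs: a 2-minute build costs 8 billable minutes
        shell: bash
        run: docker compose build platform-api
      - name: Run pytest
        shell: bash
        run: docker compose run --rm platform-api pytest ${{ matrix.test_path }}
```

Wall-clock time only sees one build, because the jobs run in parallel, but billing counts all four.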

Don’t build what you don’t need

We needed the DB migration service to create the database schema for the Platform API service. Our schema migrations are simple SQL files containing DDL statements, executed using the Alembic framework (we chose not to use its auto-generate migration feature, for better control).

Both the Platform API and DB migration services are Flask-based Python applications, so we ran the migrations directly from the Platform API service, eliminating the need to build the DB migration service.

I wrote a separate Docker Compose file specifically for GitHub Actions that removes the Platform API service’s dependency on the DB migration service. In it, the DB migration service’s codebase is attached to the Platform API service as a volume, and the service’s command is modified to run the migrations first. This saved 30 seconds, i.e. 9% of the total time.
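A minimal sketch of what such a GitHub Actions-only Compose file could look like. The post does not show the real file, so the Postgres image, the `../db-migration` path, the `/app` and `/db-migration` mount points, and the `alembic upgrade head && pytest` command are all assumptions; it also assumes the Platform API image already has Alembic installed and omits the database connection settings.

```yaml
# docker-compose.github-action.yaml (hypothetical file name)
services:
  database:
    image: postgres:16              # assumption: the database engine is not named in the post
    environment:
      POSTGRES_PASSWORD: postgres
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -U postgres"]
      interval: 2s
      retries: 15

  platform-api-github-action-pytest:
    build: .
    depends_on:
      database:
        condition: service_healthy  # waits for the DB only; no db-migration service to build
    volumes:
      # The migration codebase is mounted instead of being built into its own image
      - ../db-migration:/db-migration
    # Apply the SQL migrations with Alembic first, then run the tests
    command: >
      bash -c "cd /db-migration && alembic upgrade head && cd /app && pytest"
```

Because both services are Python applications, mounting the migration code into the API container is enough; no second image has to be built.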

Cache what you have already built

The build step for the Platform API service took 65 seconds.

- Pull base Python image: 23 seconds (6%)
- Build service: 14 seconds (4%)
- Export and load image: 28 seconds (7%)

37 seconds were spent pulling the base image and building the service, steps that are largely repeated on every run. Fortunately, Docker’s BuildKit can export the build cache to external locations.

Of the available options, the GitHub cache backend and the registry cache backend suited our use case. Caching the build steps with the GitHub cache backend gave the following results:

- Cache miss: 91 seconds (+7%, including 24 seconds of cache upload overhead)
- Cache hit: 47 seconds (-5%, including 3 seconds of cache upload overhead)

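```yaml
# pytest/action.yaml
name: Pytest
description: Runs pytest
runs:
  using: "composite"
  steps:
    # ...
    # Exposes the GitHub Actions runtime variables (e.g. ACTIONS_RUNTIME_TOKEN)
    # that the gha cache backend needs when buildx is called from a shell step
    - name: Expose GitHub Runtime
      uses: crazy-max/ghaction-github-runtime@v3
    - name: Set up Docker Buildx
      uses: docker/setup-buildx-action@v3
    - name: Build service
      shell: bash
      # mode=max caches the layers of all intermediate steps, not only the final image's
      run: |
        docker buildx build --load . \
          --cache-to type=gha,mode=max,scope=image2 \
          --cache-from type=gha,scope=image2
    # ...
```

On a cache hit, we save 18 seconds, i.e. 5% of the total time, with the following breakdown: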

- Pull base Python image: 1 second (1%)
- Build service: 3 seconds (1%)
- Docker Buildx setup overhead: 7 seconds (2%)
- Cache upload overhead: 3 seconds (1%)
- Export and load image: 28 seconds (7%)
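For comparison, the registry cache backend, the other option that suited our use case, takes the same buildx flags with a different cache type. A sketch, assuming a hypothetical `buildcache` tag in the same repository and a registry login step that is not shown. The "Expose GitHub Runtime" step from the GitHub cache setup is only needed for the `gha` backend.

```yaml
# Hypothetical step: same build, but the cache is stored in the registry
- name: Build service
  shell: bash
  run: |
    docker buildx build --load . \
      --cache-to type=registry,ref=<registry>/<image>:buildcache,mode=max \
      --cache-from type=registry,ref=<registry>/<image>:buildcache
```

Registry cache export relies on a Buildx builder that supports it, such as the docker-container builder that `docker/setup-buildx-action` creates by default.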

Although this is a decent improvement, pull requests often cause more cache misses than hits, because frequent code changes invalidate the cached Docker build steps. To solve this, I wrote a new Dockerfile specifically for GitHub Actions that removes all frequently updated code files from the build steps; those files are attached to the container as volumes instead.
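A minimal sketch of the Compose side of this change, assuming a hypothetical `Dockerfile.github-action` that copies only the dependency manifests (for example a requirements file) and installs them, without copying any application code. The `/app` mount point is also an assumption.

```yaml
# Hypothetical Compose fragment: dependencies are baked into the image,
# application code is mounted at run time
services:
  platform-api-github-action-pytest:
    build:
      context: .
      dockerfile: Dockerfile.github-action   # hypothetical: installs dependencies, copies no app code
    volumes:
      # Code changes land here instead of in a build layer,
      # so they no longer invalidate the cached build steps
      - ./:/app
    working_dir: /app
```

With this split, only a change to the dependencies triggers a rebuild; ordinary code changes hit the cache.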

Build once and use multiple times

28 seconds were spent exporting the image and loading it into the local image store. Loading an image locally requires decompressing it and fully reconstructing it from a tarball, which is CPU- and I/O-intensive. A registry push, in contrast, works with compressed layers and skips layers the registry already has. So it is actually faster to push the image to, and pull it from, a registry than to handle it locally.

With code files removed from the Docker build steps, the image rarely changes, so pushing it takes almost no time and pulling it takes around 20 seconds, saving 8 seconds, i.e. 2% of the total time.

Pushing the image to the registry also let us consolidate the Docker build step, saving billable time: once the image is pushed, the parallel jobs can use it without building it themselves. To do this, I tagged the image with the Git commit hash and passed the tag to the Docker Compose file as an environment variable.
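A value written to `$GITHUB_ENV` is visible only to later steps of the same job, so the tag has to reach the matrix jobs some other way. Since `GITHUB_SHA` is the same for every job in a run, each job can recompute the hash; alternatively, the build job can publish it as a job output. A sketch of the job-output approach: the `short_hash` name mirrors this post, while the step that echoes the tag is purely illustrative.

```yaml
jobs:
  docker-build:
    runs-on: ubuntu-latest
    # Publish the step output as a job output
    outputs:
      short_hash: ${{ steps.short-hash.outputs.short_hash }}
    steps:
      - name: Export commit hash
        id: short-hash
        shell: bash
        # $GITHUB_OUTPUT (not $GITHUB_ENV) turns the value into a step output
        run: echo "short_hash=${GITHUB_SHA::7}" >> "$GITHUB_OUTPUT"

  pytest:
    needs: docker-build
    runs-on: ubuntu-latest
    # Job-level env is set in the environment of every step in this job
    env:
      short_hash: ${{ needs.docker-build.outputs.short_hash }}
    steps:
      - name: Show image tag
        shell: bash
        run: echo "Using image tag $short_hash"
```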

# workflow/pytest.yaml

jobs:

docker-build:

name: Building image

runs-on: ubuntu-latest

steps:

...

- name: Export commit hash

id: short-hash

shell: bash

run: echo "short_hash=${GITHUB_SHA::7}" >> $GITHUB_ENV

- name: Build service

shell: bash

run: |

docker buildx build --tag <registry>/<image>:${{ env.short_hash }} --push . --cache-to type=gha,mode=max,scope=image2 --cache-from type=gha,scope=image2

pytest:

name: Running Pytest / ${{ matrix.set_name }}

needs: docker-build

strategy:

fail-fast: false

matrix:

include:

...

steps:

...

- name: Pytest

uses: ./.github/pytest

... # pytest/action.yaml

name: Pytest

description: Runs pytest

runs:

using: "composite"

steps:

...

- name: Run pytest

shell: bash

run: |

IMAGE_NAME=<registry>/<image>:${{ env.short_hash }} docker compose run --rm platform-api-github-action-pytest

...# docker-compose.yaml

version: '3.8'

services:

database:

...

platform-api-github-action-pytest:

image: ${IMAGE_NAME}

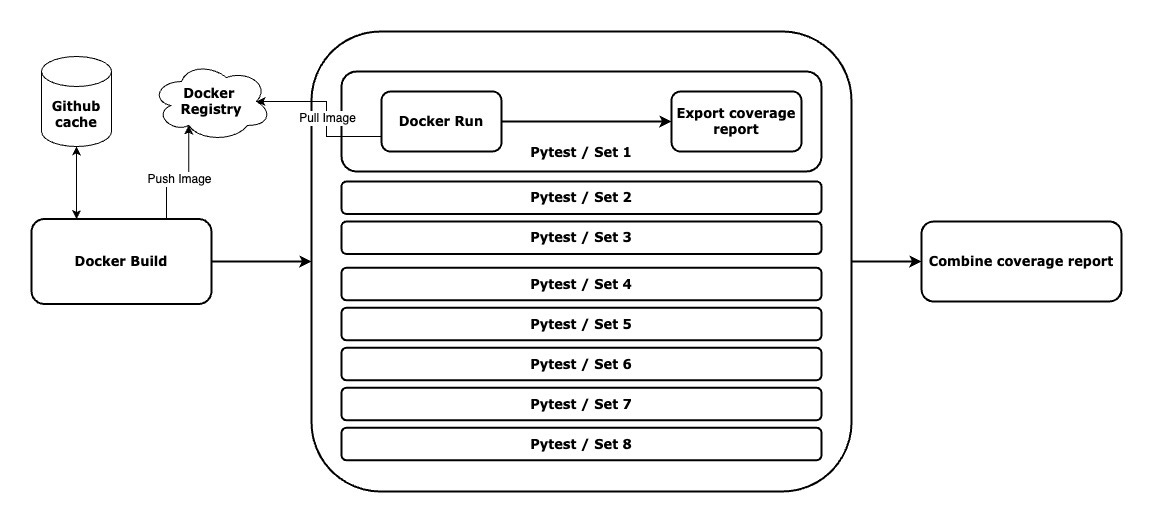

...Below is the final workflow. We saved 54 seconds, i.e. 14% of the total time, and 6 minutes of billable time, i.e. 27% of the total billable time. By utilizing the saved billable time, we increased parallelization from 4 sets to 8 sets, reducing the overall time by 132 seconds, i.e. 34% of the total time.

Takeaways

Optimize for Cache Hits: Attach frequently updated files as volumes to the Docker container instead of including them in the build steps. This approach increases the cache hit ratio for Docker builds, reducing redundancy and execution time.

Use a Central Registry for Images: Instead of loading and exporting images locally, push and pull them from a Docker registry. This not only reduces CPU and I/O overhead but also allows for better reuse of images across parallel jobs.

Consolidate Build Steps: By pushing images to a registry, the image can be built once and reused across multiple jobs, further improving efficiency and minimizing redundant processing.