Stream PostgreSQL CDC events via PGEvents

Background

PostgreSQL introduced a feature called logical decoding in version 9.4. This poorly-named feature allows us to do some very cool things like build a stream of events corresponding to changes to the table. Imagine something like this:

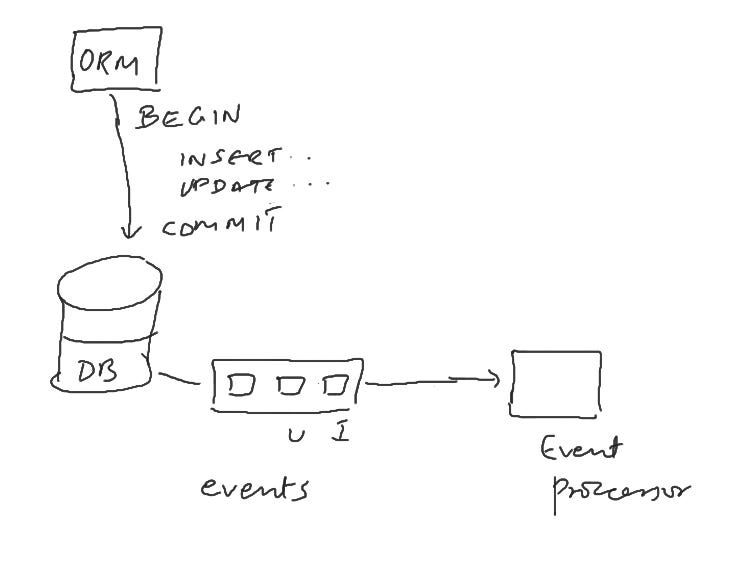

You have your ORM layer that connects to the DB and inserts, updates, deletes rows in a DB transaction. Now imagine you are able to run an event processor that receives these changes once the DB transaction is committed i.e. the changes are final. You’ll receive the complete row data including old and new value in case of an update to an existing row.

Before you let out a yawn, let me elaborate on why this is super-interesting.

Why is this interesting?

Asynchronous work is a very critical part of any SaaS application. Some examples are:

Send a welcome email to a new user

Generate a CSV output

Save audit logs for debugging and compliance. This is a very common ask from SaaS products.

Historically, most ORM frameworks allow you to hook into DB transaction lifecycle to trigger async work. Examples include Django’s pre_save and post_save signals. Here’s a nice article that shows how to link Django’s signals to Celery and do asynchronous work.

This is a bit restrictive. Imagine that you have 10 microservices. If these microservices are written in different languages / frameworks, the developer has to figure out a way to do this in 10 different code-bases. Furthermore, if a row is modified by a SQL script, those changes don’t even go via the ORM and are completely missed.

Using PostgreSQL to do the heavy-lifting decouples the generation of the events from the ORM layer completely and is more robust.

Here’s an rough diagram of how we use it to do async work at Fyle.

Introducing PGEvents

This method of streaming CDC events has been running in production at Fyle for about 2 years and decided to open-source it under the MIT License as PGEvents.

The README is pretty self-explanatory and you should be able to give it a spin within a few minutes on your laptop using docker-compose.

If you’re too lazy to try it out, check out this little gif.

Running PGEvents in production

As we all know, running stuff in production is a whole different ballgame. You’re likely using AWS or some other cloud provider. You’ll need to look up their documentation on how to get your Postgres DB ready. The steps for Amazon’s PostgreSQL RDS are listed here.

As with any queue, you may have a situation where your producer pushes lots of events but the consumer takes a while to process them. This can cause RabbitMQ to bloat up leading to OOM issues. With CDC events a large update, like a table migration can lead to a flurry of events. You might want to consider how to skip these events.

If you’re interested in giving it a spin, have questions, or want to contribute, do ping us! If you’d like to work on stuff like this, then hit me up on twitter @k2_181.

HI Siva, great article! CDC is one of the core patterns these days in async event based systems.

We use Mongo with confluent kakfa in production for something similar, both of which provide this integration seamlessly with Mongo changestreams and Kafka connectors. I am wondering why you chose RabbitMQ as opposed to kafka for this?